Containers in the Cloud – Elastic Infrastructure Explained

Discover elastic cloud infrastructure containers and services: boost scalability, security, and efficiency for modern clou...

Elastic cloud infrastructure containers and services are the foundation of modern, scalable business applications. If you're looking to understand what these technologies are and how they work together, here's a quick overview:

| Key Component | Description | Business Benefit |
|---|---|---|
| Elastic Infrastructure | Cloud resources that automatically scale up or down based on demand | Pay only for what you use; handle unpredictable traffic |
| Containers | Lightweight, portable application packages (like Docker) | Consistent deployment across environments; faster startup |
| Orchestration Services | Managed platforms like Amazon ECS, EKS, or Google GKE | Automated scaling, healing, and deployment without infrastructure management |
| Serverless Options | Services like AWS Fargate that remove server management | Focus on applications instead of infrastructure; true consumption-based billing |

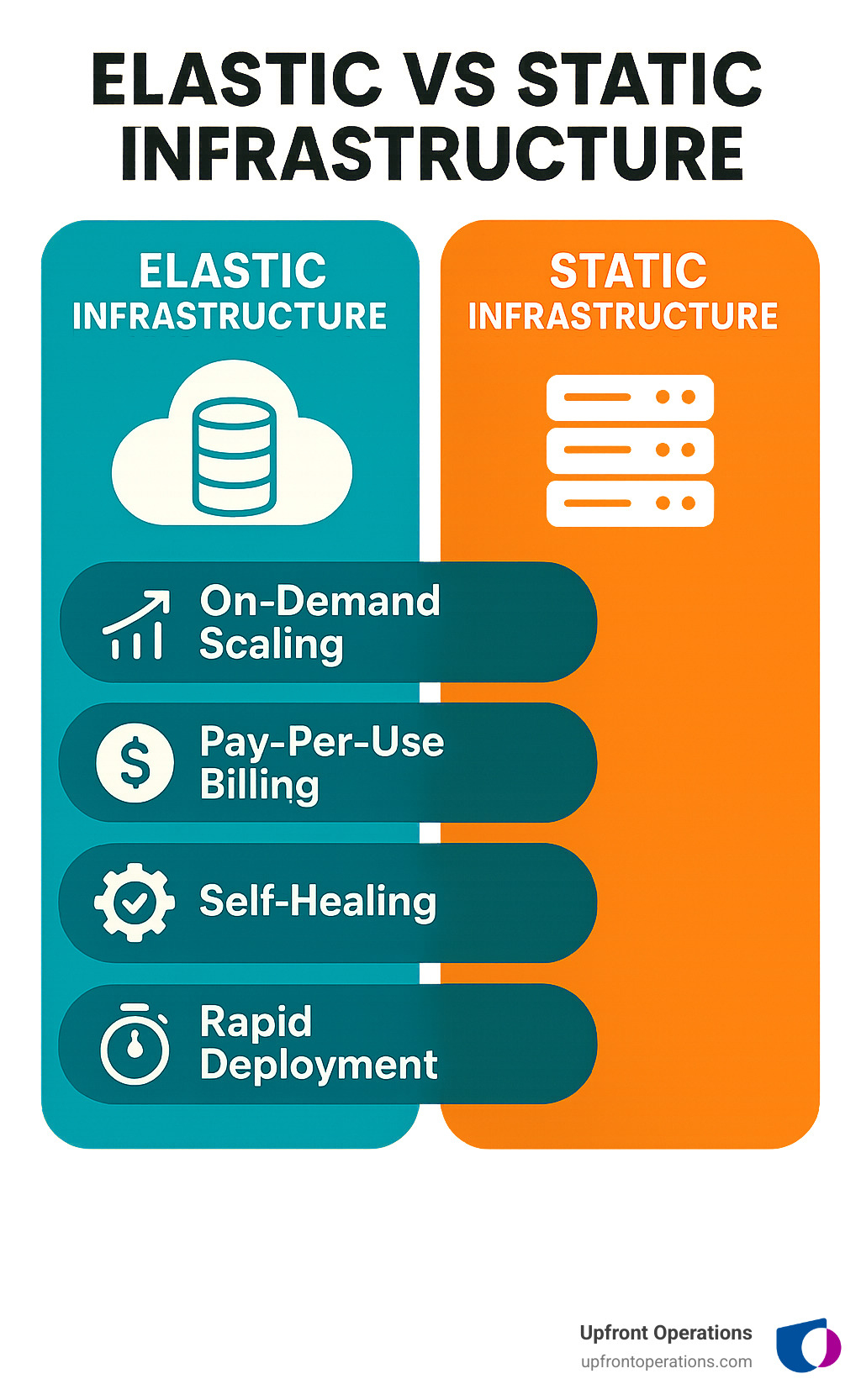

Elastic cloud infrastructure combines on-demand computing power with containerization to create systems that automatically adjust to workload demands. Unlike traditional infrastructure with fixed capacity, elastic systems can add capacity when demand rises and release it when demand falls.

Nearly 80% of containerized applications in the cloud now run on AWS, with Amazon ECS customers experiencing 150% year-over-year growth in container services usage. This explosive adoption reflects how these technologies are changing business operations, as documented in the CNCF's annual survey.

I'm Ryan T. Murphy, founder of UpfrontOps, and I've spent over a decade helping businesses implement elastic cloud infrastructure containers and services to reduce operational overhead and accelerate growth through smart automation and streamlined systems.

Elastic cloud infrastructure containers and services vocabulary:

- cloud infrastructure and platform services
- cloud network monitoring
- SD-WAN solutions comparison

At its core, elastic cloud infrastructure containers and services represent a fundamental shift in how modern businesses build and deploy applications. Let's unpack the building blocks that make this technology so powerful:

Think of elastic cloud infrastructure as your business's on-demand computing engine. Unlike traditional setups where you're stuck with fixed-capacity servers (whether you use them or not), elastic infrastructure breathes with your business needs – expanding during busy periods and contracting when things slow down.

When you adopt elastic cloud infrastructure, you're getting:

Resource elasticity that adjusts computing power in real-time as your needs change. Just like Upfront Operations' microservices that scale with your business growth without overcommitting resources.

Pay-as-you-go billing that ensures you're only charged for what you actually use. This mirrors how Upfront Operations delivers on-demand websites and business email services – you pay for value, not idle capacity.

Multi-AZ resilience that spreads your workloads across multiple availability zones, keeping your business running even if one zone experiences issues.

API-driven provisioning that lets you create, modify, and remove infrastructure programmatically – similar to how Upfront Operations' CRM optimization services automate sales processes.
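To make resource elasticity a little more concrete, here is a minimal sketch of a proportional scaling rule, similar in spirit to the one documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the CPU figures, and the replica limits are illustrative assumptions, not settings taken from any platform mentioned in this article.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to load, clamped to a range.

    All names and default limits here are hypothetical, for illustration.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Proportional rule: more load per container means more containers.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Never go below the floor or above the ceiling.
    return max(min_replicas, min(max_replicas, raw))


# Busy period: 4 containers averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90.0, 60.0))
# Quiet period: the same 4 containers averaging 15% CPU.
print(desired_replicas(4, 15.0, 60.0))
```

The clamp matters as much as the formula: the floor keeps the service available during quiet periods, and the ceiling keeps a traffic spike from turning into a billing spike.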

Ford Motor Company discovered this when building their Search Center of Excellence with Elastic Cloud on Google Cloud. They gained the ability to provision clusters on demand across global operations, monitoring both cloud infrastructure and vehicle data through one unified platform.

Containers are like the perfect travel companions for elastic infrastructure. They neatly package your applications and dependencies into standardized units that run consistently everywhere – from a developer's laptop to your production environment.

Docker images serve as self-contained application packages with everything needed to run included. This standardization is similar to how Upfront Operations delivers ready-to-use microservices that work right out of the box.

Microservices architecture breaks applications into smaller, independently deployable pieces – exactly how Upfront Operations delivers targeted sales operations support without forcing you to buy more than you need.

Task definitions specify exactly how containers should run, defining CPU, memory, and networking requirements. This clarity ensures predictable performance, much like Upfront Operations' transparent service offerings.

Service discovery mechanisms enable containers to locate and communicate with each other automatically, creating seamless experiences similar to how Upfront Operations' fractional sales-ops experts integrate with your existing teams.
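As a rough illustration of what a task definition captures, the sketch below builds one as a plain Python dictionary and prints it as JSON. The field names follow the Amazon ECS task definition format as we understand it; the service name, image path, and sizes are hypothetical placeholders.

```python
import json


def make_task_definition(family: str, image: str, cpu_units: int,
                         memory_mib: int, container_port: int) -> dict:
    """Build a minimal ECS-style task definition (illustrative only)."""
    return {
        "family": family,
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        # Task-level CPU and memory are written as strings in the JSON form.
        "cpu": str(cpu_units),
        "memory": str(memory_mib),
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


# Hypothetical service: 256 CPU units (0.25 vCPU) and 512 MiB of memory.
task_def = make_task_definition(
    "web-frontend", "example.com/web-frontend:1.0.0", 256, 512, 8080
)
print(json.dumps(task_def, indent=2))
```

Keeping CPU, memory, and networking in one declarative document is what makes performance predictable: the same definition produces the same container shape every time it runs.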

Cisco demonstrated the power of this approach when they chose Elastic to power their enterprise search platform. Their containerized services dramatically improved customer service accuracy by efficiently resolving support tickets through their search infrastructure.

By embracing elastic cloud infrastructure containers and services, businesses can achieve the same on-demand flexibility that makes Upfront Operations' microservices so valuable – scaling precisely with demand while maintaining cost efficiency and performance.

When considering elastic cloud infrastructure containers and services, businesses need to evaluate the complete picture – from benefits to potential challenges. For a broader industry snapshot, the annual CNCF Cloud Native Survey offers valuable statistics on adoption trends.

Containers are the perfect match for elastic cloud environments, and for good reason. Their fast startup times mean your systems can scale up in seconds when traffic spikes – imagine your website handling a sudden influx of visitors without breaking a sweat!

Immutable artifacts might sound technical, but it's actually a simple concept with huge benefits. Your container images stay exactly the same across environments, meaning what works in testing will work in production. No more late-night emergencies because something changed unexpectedly.

One Elastic customer put it perfectly: "Provisioning identical development, staging, and production environments quickly" eliminated those frustrating "it works on my machine" conversations that waste valuable development time.

The environment parity containers provide is like having perfect clones of your application running everywhere. And because containers share the host OS kernel, they're incredibly resource efficient – you'll get more bang for your infrastructure buck compared to traditional VMs.

Security in containerized environments isn't just an afterthought – it's woven into the fabric of how these systems work.

Namespace isolation keeps containers in their own little worlds, preventing one compromised container from seeing or affecting others. Think of it as having separate rooms in a house, where what happens in one room stays there.

The principle of IAM least-privilege means your containers only get access to exactly what they need – nothing more. It's like giving employees keys only to the rooms they need for their job, rather than master keys to the whole building.

Elastic's approach demonstrates security best practices by "isolating services and limiting each container's permissions to only required system areas" while using TLS-everywhere to ensure all communication between containers remains private and encrypted.

One security pattern we at Upfront Operations strongly recommend is "assuming breach of any single service and limiting its system access to contain compromise" – in other words, designing your system so that even if one part is compromised, the damage is contained.
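Least privilege is easier to picture with an example. The sketch below shows two policies in the standard IAM JSON shape, one scoped to a single action on a single bucket and one wide open, plus a small helper that flags broad grants. The bucket name and the helper itself are hypothetical, and the check is deliberately simple rather than a complete policy linter.

```python
from typing import List


def find_wildcards(policy: dict) -> List[str]:
    """Return a description of each broad Allow grant in a policy."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue
        for key in ("Action", "Resource"):
            values = statement.get(key, [])
            if isinstance(values, str):
                values = [values]
            for value in values:
                # "*" or "service:*" grants far more than one container needs.
                if value == "*" or value.endswith(":*"):
                    findings.append(f"statement {index}: broad {key} '{value}'")
    return findings


# Scoped policy: read objects from one (hypothetical) bucket, nothing else.
scoped_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["s3:GetObject"],
        "Resource": "arn:aws:s3:::example-reports-bucket/*",
    }],
}

# Overly broad policy: every S3 action on every resource.
broad_policy = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}],
}

print(find_wildcards(scoped_policy))
print(find_wildcards(broad_policy))
```

If the container using the scoped policy is ever compromised, the attacker can read one bucket. With the broad policy, the same breach reaches everything in S3, which is exactly the outcome the "assume breach" pattern is meant to prevent.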

The economics of elastic cloud infrastructure containers and services offer refreshing flexibility compared to traditional infrastructure.

With AWS Fargate pricing, you pay for the vCPU and memory your containers request, for as long as they run. It's like paying for electricity only when the lights are on – a far cry from the old days of paying for idle servers.
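Here is a small worked example of how consumption-based billing adds up. The hourly rates are made-up placeholders, not actual AWS prices, so treat the output as an illustration of the arithmetic rather than a quote.

```python
def task_cost(vcpu: float, memory_gb: float, hours: float,
              vcpu_hour_rate: float, gb_hour_rate: float) -> float:
    """Cost of one task: (vCPU charge + memory charge) per hour, times hours."""
    return hours * (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate)


# Placeholder rates, assumed for illustration only.
VCPU_HOUR_RATE = 0.04   # currency units per vCPU-hour
GB_HOUR_RATE = 0.004    # currency units per GB-hour

# A small task (0.25 vCPU, 0.5 GB) running around the clock for ~730 hours.
always_on = task_cost(0.25, 0.5, 730, VCPU_HOUR_RATE, GB_HOUR_RATE)

# The same task running only 8 hours a day for 30 days.
business_hours = task_cost(0.25, 0.5, 8 * 30, VCPU_HOUR_RATE, GB_HOUR_RATE)

print(f"always on:      {always_on:.2f}")
print(f"business hours: {business_hours:.2f}")
```

The second figure is roughly a third of the first because the task simply isn't running, and isn't billed, for the other sixteen hours of each day.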

For non-critical workloads, spot instances can slash your costs by up to 90% by utilizing excess cloud capacity. Imagine getting hotel rooms at steep discounts during off-peak seasons – that's the kind of savings we're talking about.

Many providers offer sustained-use discounts that kick in automatically when you use services consistently. And because containers make resource right-sizing much easier, you can match your infrastructure precisely to your actual needs.

For example, a 32GB RAM Elasticsearch cluster typically receives twice the CPU allocation of a 16GB cluster, showing how resources can scale proportionally with your workload requirements – no waste, no shortage.

While the benefits are substantial, scaling containerized services comes with challenges you should prepare for.

Container sprawl happens as your services multiply. Suddenly managing hundreds or thousands of containers becomes like herding cats without proper systems in place.

Image drift occurs when container images become inconsistent across services – imagine if some of your team were working from an outdated playbook while others had the latest version.

Understanding performance across distributed services requires specialized monitoring tools to avoid observability gaps. Without them, troubleshooting becomes like finding a needle in a haystack.

One operations team discovered the hard way how noisy neighbor scenarios can impact system performance when "one service can starve others if not contained." It's similar to how one loud apartment can disrupt an entire building – proper resource allocation is essential to maintain harmony.

At Upfront Operations, we've seen how these on-demand containerized services can transform businesses of all sizes. Whether you need simple microservices like websites and business email that spin up instantly, or you're looking for ways to optimize your CRM and sales pipelines with elastic infrastructure, the right approach makes all the difference in scaling efficiently and cost-effectively.

When implementing elastic cloud infrastructure containers and services, choosing the right orchestration platform is a critical decision. Let's compare the major options:

| Feature | Amazon ECS | Amazon EKS | Google GKE | Elastic Cloud |
|---|---|---|---|---|
| Control Plane Management | Fully managed by AWS | Managed Kubernetes control plane | Managed Kubernetes control plane | Fully managed by Elastic |
| Launch Types | EC2 and Fargate | EC2 and Fargate | Standard, Autopilot | Cloud provider VMs |
| Open Source Alignment | Proprietary | Kubernetes (CNCF) | Kubernetes (CNCF) | Elastic Stack (Apache 2.0) |
| Hybrid Options | ECS Anywhere | EKS Anywhere | Anthos | Elastic Cloud Enterprise |
| Marketplace Availability | AWS Marketplace | AWS Marketplace | Google Cloud Marketplace | AWS, Google Cloud, Azure Marketplaces |
| Pricing Model | Pay for resources used | Control plane fee + resources used | Free control plane + resources used | Subscription based on deployment size |

Think of managed container orchestration as having a professional pit crew for your race car. You focus on driving (your business) while they handle all the maintenance and optimization behind the scenes.

With these platforms, you'll enjoy control plane SLAs that guarantee availability, zero-patching requirements that eliminate maintenance headaches, and declarative APIs that let you define what you want rather than how to do it.

Amazon ECS offers what they affectionately call an "autonomous control plane" – you never need to worry about managing nodes or third-party add-ons. This hands-off approach means your team can focus on creating value through applications instead of babysitting infrastructure. It's like having on-demand IT support that's always there but never in your way.

Each platform has its own personality that shapes your daily workflow – kind of like choosing between different car models for your daily commute.

ECS offers flexible network modes (awsvpc, bridge, host) that give you options for how containers connect. Meanwhile, Kubernetes-based platforms like EKS and GKE use a pod-based networking model that many developers find intuitive once they get used to it.

The add-on ecosystem varies significantly too. EKS and GKE tap into the vibrant Kubernetes community with thousands of extensions, while ECS offers deeper, more seamless AWS service integrations. As one platform migration report noted, "ECS's simplicity is a double-edged sword—what makes it easy at first creates complexity later," particularly when you're ready for advanced deployment strategies.

Upgrade flows also differ – Kubernetes platforms follow their community release cycle, while ECS marches to AWS's drum. This matters when planning maintenance windows and feature adoption timelines.

Picking the right platform is like choosing the perfect tool for a job – it depends on what you're building and who's doing the building.

If your team already speaks fluent Kubernetes, EKS or GKE will feel like home. The skillset match can dramatically reduce your learning curve and time-to-value. On the other hand, teams new to containerization often find ECS's simplicity refreshing.

Consider your comfort level with lock-in risk too. Proprietary platforms like ECS create stronger vendor relationships (or dependencies, depending on your perspective), while open standards like Kubernetes offer more flexibility to move between providers.

The ecosystem tools around each platform matter tremendously for day-to-day productivity. Think about how your existing monitoring, CI/CD, and security tools will integrate with each option.

At Upfront Operations, we've guided countless clients through this decision. For smaller businesses seeking simplicity and on-demand scalability, we often recommend ECS with Fargate – it's like having your infrastructure on autopilot. For larger enterprises with complex deployment patterns, we typically suggest Kubernetes-based solutions that offer the flexibility to grow without constraints.

Our on-demand microservices architecture relies on these elastic container services to deliver lightning-fast websites and business email solutions that can scale instantly with your needs. Whether you're a solopreneur launching your first professional site or an enterprise optimizing your CRM infrastructure, these container platforms provide the foundation that makes our responsive, elastic services possible.

As your containerized applications grow, elastic cloud infrastructure containers and services require more sophisticated operational patterns to keep everything running smoothly.

Let's face it – not everything can be stateless. Many real-world applications need to remember things!

When your containers need persistent data, you've got several solid options. Container Storage Interface (CSI) drivers create a standardized way for containers to interact with storage systems, regardless of the cloud provider. This means you can write your storage configuration once and use it everywhere.

Dynamic provisioning is another game-changer. Instead of manually creating storage volumes ahead of time, your containers can request storage on-demand, and the cloud infrastructure automatically creates it. This perfectly complements the elastic nature of container environments.
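On Kubernetes-based platforms, a request for dynamically provisioned storage typically takes the form of a PersistentVolumeClaim. The sketch below builds one as a Python dictionary and prints it as JSON. The claim name, size, and storage class are hypothetical, and we are assuming a cluster whose storage class is backed by a CSI driver that creates volumes on request.

```python
import json


def make_volume_claim(name: str, size_gib: int, storage_class: str) -> dict:
    """Build a minimal PersistentVolumeClaim manifest (illustrative only)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # One node at a time may mount the volume read-write.
            "accessModes": ["ReadWriteOnce"],
            # The storage class decides which provisioner creates the volume.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gib}Gi"}},
        },
    }


# Hypothetical claim: 20 GiB from a storage class named "standard".
claim = make_volume_claim("orders-db-data", 20, "standard")
print(json.dumps(claim, indent=2))
```

Notice that nothing here names a specific disk. The container asks for capacity, and the platform creates a matching volume only when the claim is made.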

"We helped a client transition from pre-allocated storage to dynamic provisioning, cutting their storage costs by 42% while improving reliability," notes Ryan Murphy from Upfront Operations. "They were paying for storage they weren't using most of the time."

Data locality considerations become crucial at scale too. By placing containers physically close to their data, you can dramatically reduce latency – something that becomes increasingly important as your data grows.

Today's businesses rarely live in just one environment. They need flexibility across multiple clouds and on-premises systems.

ECS Anywhere and EKS Anywhere extend AWS container orchestration beyond the AWS cloud, letting you run your containerized workloads consistently on your own hardware. This is perfect for companies with significant existing infrastructure investments or specific compliance needs.

Google's Anthos takes a similar approach but works across multiple clouds – Google Cloud, AWS, Azure, and on-premises environments. This gives you tremendous flexibility while maintaining consistent operations.

One of our manufacturing clients uses containerized applications that seamlessly span their factory floor systems and cloud environments. Their quality control data flows through the same pipelines regardless of where it originates, giving them a unified view of operations.

Cross-cluster search technologies enable unified operations across deployments. Ford's Search Center of Excellence demonstrates this brilliantly, using containerized Elastic services to monitor both cloud infrastructure and vehicle telemetry through a single platform.

The real magic happens when you connect your containers to robust supporting systems.

Container registries like Amazon ECR or Google Container Registry serve as secure, version-controlled homes for your container images. AWS users alone perform over 14 billion weekly image pulls using ECR – that's how critical these registries are to modern deployment pipelines.

CI/CD pipelines automate the build, test, and deployment process. When a developer commits code, the pipeline automatically creates a new container image, runs tests, and deploys it if everything passes. This dramatically speeds up release cycles while maintaining quality.
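The "deploy only if everything passes" logic can be sketched in a few lines. This is a toy model rather than any real CI/CD tool: each stage is a hypothetical placeholder function that returns True on success, and the pipeline stops at the first failure.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and return the names of those that completed."""
    completed = []
    for name, step in stages:
        if not step():
            # A failed stage blocks everything after it, including deploy.
            print(f"{name} failed; later stages skipped")
            break
        completed.append(name)
    return completed


# Placeholder stages standing in for real build, test, and deploy steps.
passing_run = run_pipeline([
    ("build image", lambda: True),
    ("run tests", lambda: True),
    ("deploy", lambda: True),
])

failing_run = run_pipeline([
    ("build image", lambda: True),
    ("run tests", lambda: False),
    ("deploy", lambda: True),
])

print(passing_run)
print(failing_run)
```

In the second run the deploy stage never executes, which is the whole point: a broken build cannot reach production by accident.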

For monitoring, tools like Prometheus, CloudWatch, and Stackdriver provide container-level metrics that help you understand performance and resource usage. But metrics alone aren't enough.

True observability requires distributed tracing through technologies like OpenTelemetry, which helps track requests as they flow through your microservices. This is invaluable when troubleshooting issues in complex systems.

"The difference between monitoring and observability is like the difference between knowing your car's speed and knowing why it's making that strange noise," as one of our engineers likes to say.

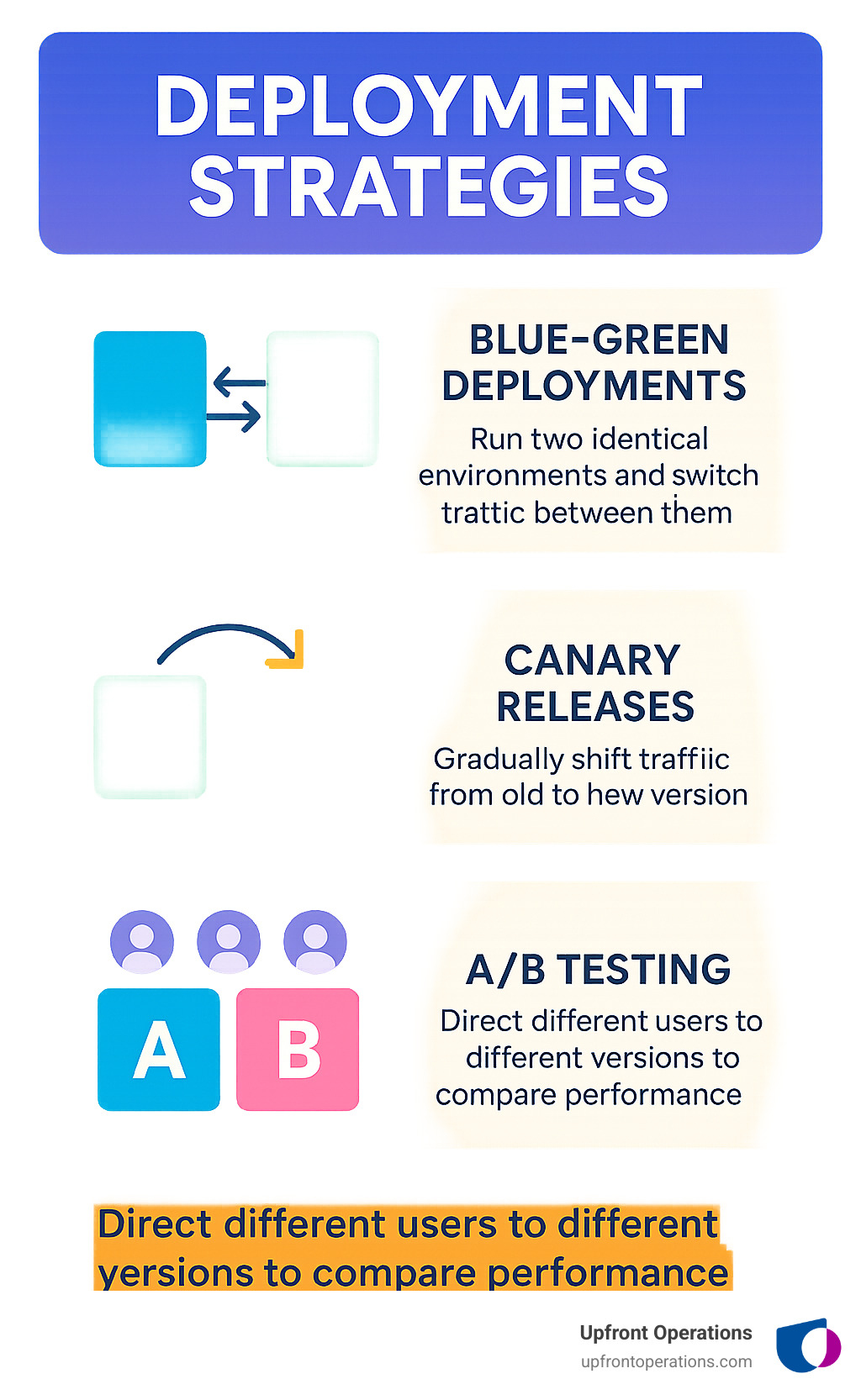

With containers, you can implement sophisticated deployment strategies that would be nearly impossible with traditional infrastructure.

Blue/green deployments run two identical environments simultaneously. When you're ready to release a new version, you simply switch traffic from the old (blue) environment to the new (green) one. If something goes wrong, you can instantly roll back by routing traffic back to blue.

Canary releases take a more gradual approach, slowly shifting traffic from old to new versions. This lets you test the waters with real users while limiting risk. Maybe 5% of users see the new version initially, then 10%, and so on as confidence builds.

A/B testing directs different users to different versions to compare performance or user behavior. This is fantastic for data-driven decision making – "do users prefer the new checkout flow or the old one?" becomes a question you can answer with real data.
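The traffic-splitting idea behind canary releases can be sketched with a deterministic hash, so each user consistently sees the same version as the rollout percentage grows. The function name and user IDs below are hypothetical, and real platforms usually handle this at the load balancer or service mesh rather than in application code.

```python
import hashlib


def assign_version(user_id: str, canary_percent: int) -> str:
    """Route a user to "new" or "old" based on a stable hash bucket (0-99)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "new" if bucket < canary_percent else "old"


users = [f"user-{i}" for i in range(10000)]

# Start with roughly 5% of users on the new version, then widen to 10%.
at_5 = {u for u in users if assign_version(u, 5) == "new"}
at_10 = {u for u in users if assign_version(u, 10) == "new"}

print(len(at_5), len(at_10))
# Everyone who saw the new version at 5% still sees it at 10%.
print(at_5.issubset(at_10))
```

Setting the percentage to 0 or 100 gives the all-or-nothing switch of a blue/green deployment, and the same bucketing can assign users to variants in an A/B test.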

It's worth noting that your platform choice affects how easily you can implement these strategies. "A canary deployment in Kubernetes is a documented pattern, whereas ECS requires more complex scripting and multiple AWS services," explains our cloud architect. This illustrates why platform selection should align with your deployment needs.

At Upfront Operations, we've helped businesses implement these advanced patterns using on-demand microservices that can scale instantly to meet traffic demands. Whether you're running a flash sale that brings 10x normal traffic or launching a new product feature to a subset of users, elastic cloud infrastructure containers and services provide the flexibility you need to grow confidently.

Transitioning to elastic cloud infrastructure containers and services is a journey that countless organizations have navigated successfully, and with good reason. Let's explore how businesses are making this shift and the real results they're achieving.

The path to containerization doesn't have to be all-or-nothing. Most successful migrations follow one of three approaches:

Lift-and-shift offers the gentlest entry point, where you simply package existing applications into containers without code changes. AWS App2Container exemplifies this approach, helping teams containerize existing .NET and Java applications while preserving their original functionality. It's like moving your house intact to a new neighborhood—same house, better location.

Re-platform involves modest modifications to better leverage container benefits. Think of it as renovating key rooms in your house during the move—keeping the structure but upgrading the kitchen and bathrooms.

Refactor represents the most transformative approach, rebuilding applications as true cloud-native microservices. One migration expert I worked with put it perfectly: "Breaking a monolith into microservices on ECS allowed our team to modernize incrementally without disrupting daily operations." It's like redesigning your home room by room while still living in it.

For larger organizations, a phased approach with Helm charts provides the perfect balance of standardization and flexibility, letting teams containerize at their own pace while maintaining consistent deployment patterns.

The numbers don't lie when it comes to the impact of elastic cloud infrastructure containers and services:

Elastic customers on Google Cloud are experiencing 73% faster search queries—imagine cutting a 10-second wait down to under 3 seconds! Support engineers are saving a staggering 5,000 hours monthly with containerized platforms, hours they now dedicate to innovation rather than maintenance.

AWS Fargate usage tells an equally compelling story, with usage tripling in just one year to reach 100 million tasks running weekly. Meanwhile, Amazon EKS usage grew 10x in a single year, demonstrating just how quickly businesses are embracing Kubernetes-orchestrated containers.

Cisco's experience particularly stands out. By implementing Elastic to power their enterprise search platform, they dramatically improved customer service accuracy through more efficient ticket resolution. Their containerized search infrastructure now connects support agents with solutions in seconds rather than minutes.

At Upfront Operations, we've seen similar changes when implementing on-demand microservices for sales operations functions. One client's CRM data processing went from overnight batch jobs to real-time updates, giving their sales team immediate insights into customer behavior.

Security in elastic environments works like layers of an onion. At the core, containers are isolated through Linux namespaces and cgroups—essentially giving each container its own private view of the system. Network security adds another layer with traffic filters and security groups controlling what can communicate with your containers.

IAM roles with least-privilege permissions ensure containers can only access what they absolutely need. As Elastic demonstrates by "using Stunnel to enforce TLS even when individual services lack native support," encryption between services provides yet another security layer.

The beauty of managed platforms is automatic security patching—you're always running the latest, most secure version of the underlying platform without lifting a finger.

Yes, you can run databases in containers, but should you? Container-based databases work well with persistent volume claims that ensure your data survives container restarts. However, there are important considerations.

For mission-critical workloads, managed database services often provide better reliability and less operational overhead. I've found that a hybrid approach typically works best: stateless application containers paired with managed database services for persistent data.

One client tried running their entire PostgreSQL database fleet in containers but found that the performance variability wasn't worth the flexibility gains. They ultimately moved to Amazon RDS for production while keeping containerized databases for development and testing.

Asking how much containerized infrastructure costs is like asking "how much does a house cost?" It depends on size, location, and features! For containers, cost varies based on several factors.

Container size matters tremendously—a microservice needing 0.25 CPU and 0.5GB RAM costs far less than one requiring 2 CPUs and 8GB RAM. Traffic patterns determine how often your containers scale up and down, while storage requirements and data transfer add additional costs.

For a ballpark estimate, 100 small microservices on AWS Fargate might cost $5,000-$10,000 monthly, but I've seen similar setups run for half that when optimized for cost. The pay-as-you-go model means your bill scales with actual usage—a lifesaver for seasonal businesses.

Implementing elastic cloud infrastructure containers and services requires both technical know-how and business alignment. At Upfront Operations, we specialize in helping businesses navigate this journey with our on-demand microservices and fractional sales operations expertise.

Whether you need simple microservices like business email and websites delivered on-demand, or more complex CRM optimization and pipeline acceleration, our New York-based team provides the expertise you need without the overhead of traditional agencies.

We focus on essential services that scale with your business, using the same elastic principles we've discussed throughout this article. By implementing cloud-native patterns and containerized services, we help businesses close deals faster and scale smoothly.

Ready to see how elastic cloud infrastructure can transform your operations? Learn more about our cloud-ready services designed with the perfect blend of technology and human expertise to boost your growth.

Remember: in today's business world, the ability to scale resources on demand isn't just a technical advantage—it's a competitive necessity. Elastic cloud infrastructure containers and services provide the foundation for building agile, resilient systems that grow with your business, turning infrastructure from a constraint into a catalyst for growth.